Bayes, bits & brains

This site is about probability and information theory. We'll see how they help us understand machine learning and the world around us.

A few riddles

We'll get to the content, prerequisites, and logistics later. First, I hope the following riddles give you a feel for what this is about, and that some of them nerd-snipe you! 😉 You will understand all of them by the end of this minicourse.

Onboarding

As we go through the minicourse, we'll revisit each puzzle and understand what's going on. More importantly, we will learn some important pieces of mathematics and build a solid theoretical background for machine learning.

Here are some questions we will explore.

- What are KL divergence, entropy, and cross-entropy? What's the probabilistic intuition behind them? (chapters 1-3)

- Where do powerful principles like maximum likelihood and maximum entropy come from? (chapters 4-5)

- Why do we use logits, softmax, and Gaussians all the time? (chapter 5)

- How do we set up loss functions? (chapter 6)

- How does coding work, and what intuitions does it give us about LLMs? (chapter 7)

- How should we think about compression, and what does it tell us about machine learning and statistics? (chapter 8)

What's next?

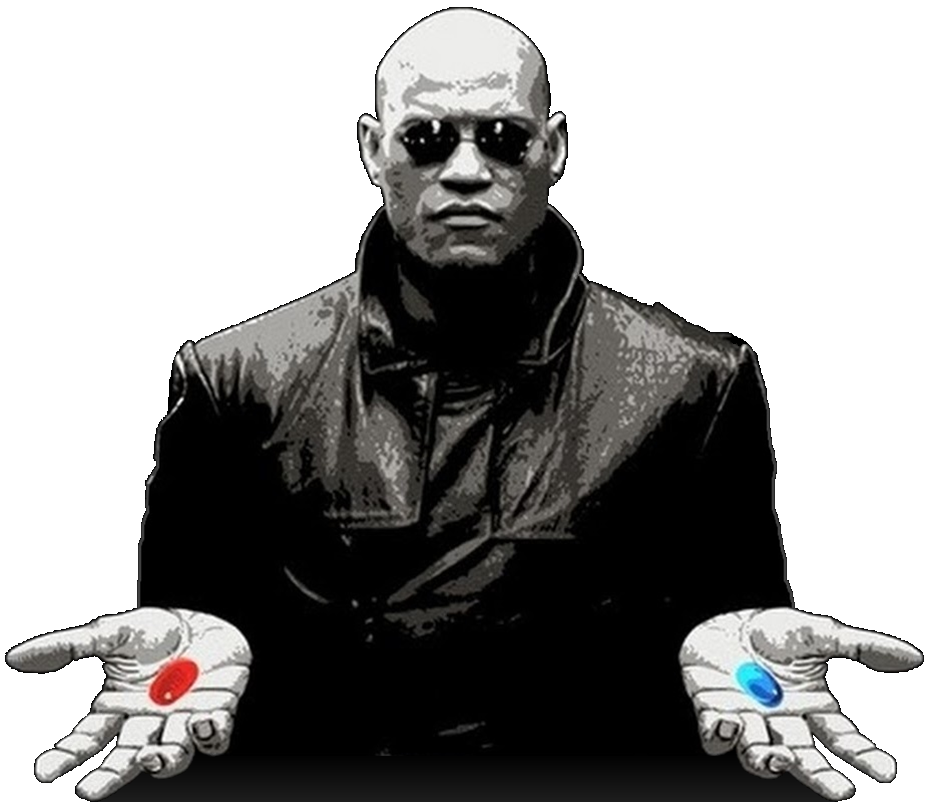

This is your last chance. You can go on with your life and believe whatever you want to believe about KL divergence. Or you can go to the first chapter and see how far the rabbit hole goes.